This Future of TV Briefing covers the latest in streaming and TV for Digiday+ members and is distributed over email every Wednesday at 10 a.m. ET.

This week’s Future of TV Briefing features an interview with Coca-Cola’s Pratik Thakar about the brand’s AI-generated holiday ad that has been getting a lot of attention, for better and worse.

Prompt the feeling

This year’s trend of brands incorporating generative AI technology into ads and getting panned for doing so continues. First, it was Toys “R” Us. Then Google. And now it’s Coca-Cola’s turn.

Last week the beverage behemoth debuted a holiday campaign that updated a classic 1995 campaign by using generative AI tools to create the ads. Cheery as the ads are, they have received plenty of jeers – a reaction Coca-Cola could have foreseen, though one that did not greet the brand’s previous AI-generated ads. All of which has left me wondering two things:

- Why did Coca-Cola feel this ad needed to be AI-generated (beyond shiny new toy syndrome)?

- How much of this ad was actually AI-generated? All of it?

So I put those questions to Pratik Thakar, global head of generative AI at The Coca-Cola Company, in the interview below.

But before we get to that, a disclaimer: My approach to covering the intersection of generative AI and content creation is that gen AI is a tool. And like any tool — be it a pencil or a gun — it can be helpful or hurtful, and that depends on how it is used. So my aim for this interview was, again, to understand why Coca-Cola felt a need to use generative AI to create the ads and what role(s) generative AI tools and humans played in the ads’ creation.

This interview has been edited for length and clarity.

Why did this ad need to be created using generative AI tools?

We were looking at different options for Christmas earlier this year, and then we zeroed into our iconic “Holidays are Coming” film. It needed some kind of an update because we wanted to go beyond Great Britain, where we used [the ad] quite a lot, regularly in the U.K.

Now, generative AI doesn’t do justice when we want to show something very realistic. But when we want to show something hyper-realistic and fantastical, gen AI is the perfect tool or technology. And this as just an idea itself, as a concept, it’s a fantastical idea. At Coke, we do very realistic work and very human work, and we do some magic work also. In this case, it was heavy on the magic side and bringing that real emotions of the human. And so we thought better we use this technology for this specific film and concept and create multiple versions because gen AI gives us that imagination superpower.

We wanted to experiment with multiple different production houses [Secret Level, Silverside AI and Wild Card]. So we went to three different [companies]; we thought one of them or couple of them will get it right. But all three of them got it right in different ways. It’s the same brief; the essence is the same.

What was the brief given to them?

There were four components. One was we wanted to take our 1995 “Holidays are Coming” from archive; we didn’t want to lose the essence. So we said, “Look, let’s create a real balance of what technology can do, but keep the humanity perspective also.” So like music, we said we can re-score it to match the film tempo, but we don’t want to create [the music] with AI. We will re-score it with real humans. So for all three films, we re-scored [with] three different sets of artists, musicians.

Why was that important? Like, why not use generative AI for the music as well?

Because we thought that we wanted to capture the video aspect, the imagery aspect, of the fantastical world. At the same time that earworm, that catchiness of the song is so powerful [that] we don’t need to fix it. And we wanted to keep certain elements balanced with humans also while we push the envelope on the visual aspect of the storytelling.

And that’s where we started pushing in terms of technology, like [RunwayML’s text-to-video AI generation tools] Gen-2 [and] Gen-3. The other one is Leonardo AI. This was all part of the briefing. Leonardo AI, we have created a special partnership where they opened up their [text-to-image AI tool] Phoenix. It was only available to us; whoever was working on [our] brief, they had access to that. So we wanted to stay ahead of the curve; we wanted to stay cutting edge. And then of course Luma Labs [was another generative AI company whose tools were used for the campaign].

We told everyone, “This is the brief from what we want to reimagine. We want to keep the balance.” Certain things we can push the envelope, and certain things we want to keep the original [like] the authenticity of the music. Then we told them that we want to go beyond just specific U.K. imagery, and we want to make it global. So you may have seen some of the imagery–

Like the monkeys. [Editor’s note: They’re called Japanese macaques.]

Yeah, of Japan and Route 66 and Grand Canyon, all those. That’s where we wanted to expand it. The fourth point was ethical prompting. We have created our internal ethical prompting guideline. So we actually told [the production companies], “Look, you need to follow and work with our guidelines.”

What are those guidelines?

As an example, I can’t say that I want to create an image of a polar bear with this particular scene in [a] National Geographic series. If I have to take anything, then we need to train it with our imageries of our trucks, our polar bear — whatever imagery we have, we want to train the model with that. I can’t say, “Do the Christmas decoration lighting like this particular film of Disney.”

So protecting your own intellectual property but then also respecting others’ IP.

And that’s very crucial. And we were very particular about it because the source imagery and how we fine-tune it and make it look connected with our original “Holidays are Coming” film. We wanted to keep that essence.

Now, in 1995 we couldn’t shoot real Northern Lights. Here, we wanted to show Northern Lights. We wanted to take our polar bear as part of our history and our heritage and show it in a more interesting way. So all those elements, we built it.

The other thing: We didn’t want to use children in the film because we have our own “not marketing to children” policy. So we actually use animals showcasing the human connections and human behavior basically as a metaphor.

So that was the brief from the tech and tools perspective, from the vision perspective, from the expansion perspective and from what we want to keep and preserve and what we want to expand and push and explore.

What was the part of the brief around fantastical imagery? Because I feel like that may be where a lot of the negative reaction to the ad is. Like, there’s a lot in there that feels like it could have just been captured regularly. So what are the fantastical elements?

You may have seen one of the imagery where a polar bear family is sitting and watching TV. Now that is a very good aspect of showcasing a family watching TV. The other one was showcasing the satellite saying “Holidays are coming.” Another thing is the puffins and all those elements.

We are not going to shoot all those animals ever. In the past, we have done a lot of polar bear films as a company. And of course we shouldn’t and never shot polar bears [on camera for] real. We have to do CGI [computer-generated imagery]. That was a cutting-edge tool at that time, and now it’s gen AI.

When it comes to generative AI being used, you could create an ad entirely from prompts. To what extent was that the case here as opposed to there being human-made illustrations, animations or even full live-action sequences that were created to serve as the basis for any of the AI-generated visuals?

We had an overall storyline that was basically a reference point from the 1995 film. We didn’t ask AI to [come up with] the plot. So that’s one. The basis, the foundations were actually coming from our archive, and it’s human-created.

The creative decisions were all made by humans. AI can throw multiple directions and different elements, but then you decide this is what we think is the right direction to go.

In one particular film called “Secret Santa” from Secret Level, we have put humans, and those are real humans. They are actual people. We actually took their consent, like a typical recruiting any talent for the film. Now the reality is we don’t need too much time there, like a physical time. But they are real humans. We have their consent. And they exist.

Were they compensated?

Yes, absolutely.

The same amount as they would have been for a normal shoot?

Not really, I would say. But again, we paid them which is kind of an industry standard right now for this kind of production.

Were they SAG-AFTRA members? Because I know SAG-AFTRA has these protections in place, and I know there have been brands that have policies in place where if they are using humans and generative AI, they are working with a union like SAG-AFTRA to ensure people are being properly compensated.

I would come back on that, just to double-check with the production house. So let us come back to you. I can definitely confirm here those are real humans, we have consents from them and we have paid them.

[Editor’s note: According to a Coca-Cola spokesperson, “The Coca-Cola Company is not a signatory, authorizer, or otherwise bound by any collective bargaining agreements with SAG-AFTRA. This is Company-wide, not AI-specific.”]

I kind of alluded to there can be work going into the pre-production for these ads, like having visual bases. What was the work that needed to be done on the other end to take the AI-generated visuals and have them ready to run as the final ad?

Once we had the overall moodboard, we had to render it one by one. Rendering was that time [when] we had to say, “OK, this is not rendering well, and we have to come back and try again or change the prompting a little bit.” It’s a very much human exercise, which we wanted.

Obviously, not everybody is happy about brands using generative AI to create ads, specifically when the technology creates the actual contents of the ad versus being used for pre-production. But this wasn’t the first AI-generated ad that you all have made; I’ve covered the “Masterpiece” one and don’t remember there being pushback there. But this year, Toys “R” Us got pushback, Google got pushback. To what extent did you take consumer sentiment as well as industry sentiment into consideration with this ad?

We actually did extensive research in North America and Europe markets. They were both scored very well. Highly scored, actually, compared to many other films we have done in recent past. So consumer research was very, very favorable.

Now what is happening is one sector of the industry — I would say, more than consumers — are reacting. Because creative agencies, they are getting disrupted right now as an industry, and they are a little nervous, which is understandable.

Right now some people think that we just press the button and the film came out. That’s not the reality. We have a making-of video from Secret Level. We are going to share it with you. It gives a little more perspective from the production house and the creative director.

So yes, reaction is polarized. What we feel is it’s one sector of people, they immediately jumped into saying that we are just creating something very quick and it’s like pressing the button and the ad comes out versus how much work goes in. And that’s why this type of conversation, we need to do more. We feel that we haven’t communicated well, as the way we are communicating now, and we need to do that better.

The way we are all using CGI and Adobe, the same way people will be using AI, and there’s nothing wrong. As a company, as I mentioned, ethical prompting, right balance of humans driving the tool. And AI is a tool. It’s a tool made for humans.

What was the full timeline for this campaign in terms of creation up to launch? And what’s the ballpark in terms of how many people were involved in creating these ads?

We started this briefing in June. So June to October.

One part I haven’t explained, we have done hyper-local programmatic content. So if you are in L.A. and you are watching that film, you will see our Coke truck is passing by the L.A. highway and it says “Welcome to Los Angeles.” Twelve different cities will have that geo-located [content]. Those kind of work actually took a little longer because it’s also connected to media planning.

And usually we do one film for Christmas, but this time we [decided to] do three because we had more efficiency in terms of time and imagination.

And how many people were involved?

I would say average around 15 to 20 people per production house. And then the music re-scoring crew, [there] were like 20 of them, just the music group. Because you need all the instrumentalists and vocals and everything.

There were a lot of people actually [who] worked on this. VFX artists. These studios, they are not big, large, gigantic companies. Silverside or Wild Card, they have like a one-office operation. And that’s good, actually, to work with those kind of people. Those are like individual local business.

Coming out of this campaign, the work that went into it as well as now the reception it’s been getting, is there anything you would do differently in the future when it comes to using gen AI technology in ad creative?

One thing I would definitely do, as a team we would do better, is basically communicate with people like you much [in] advance and tell our story in much more detail.

Number two, I would say we could balance — we got balance of technology we will keep using. Now not for everything. This film, it was a perfect film to use AI. Now, sometimes we need real humans eating a family meal, and we won’t use gen AI for that. We will shoot real people and in a more traditional way. “Masterpiece” required the technology. Technology was in service of storytelling. It was not the other way.

But we are happy — actually, we are super happy and proud of creating this work, internally, and that’s why I’m talking to you. We are super proud of creating and pushing this envelope. And maybe there’s an inflection point right now for the industry. We need to keep pushing the envelope to stay ahead of the curve.

What we’ve heard

“I don’t know if I was supposed to disclose those AVOD numbers.”

Traditional TV watch time grows while streaming stagnates

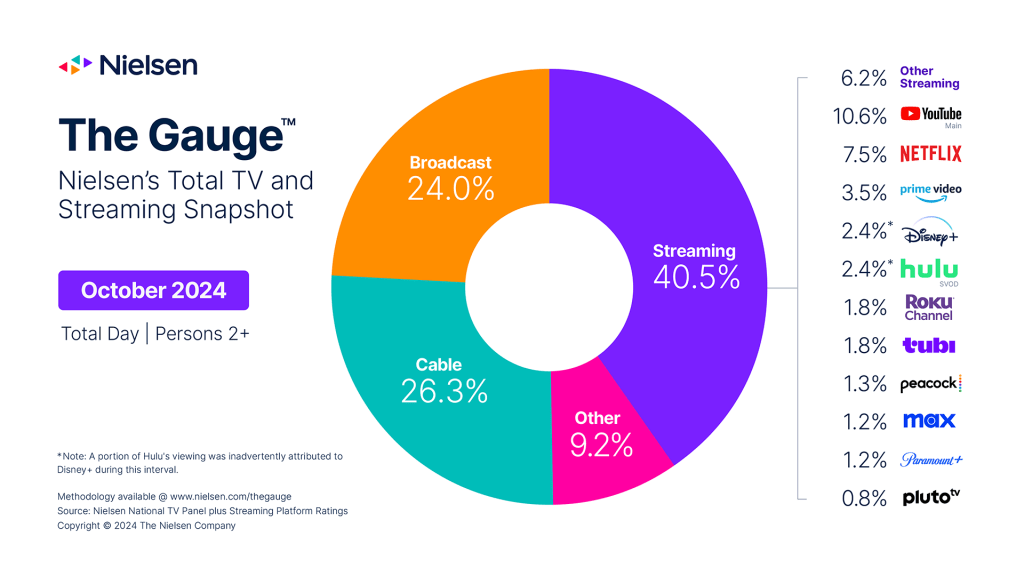

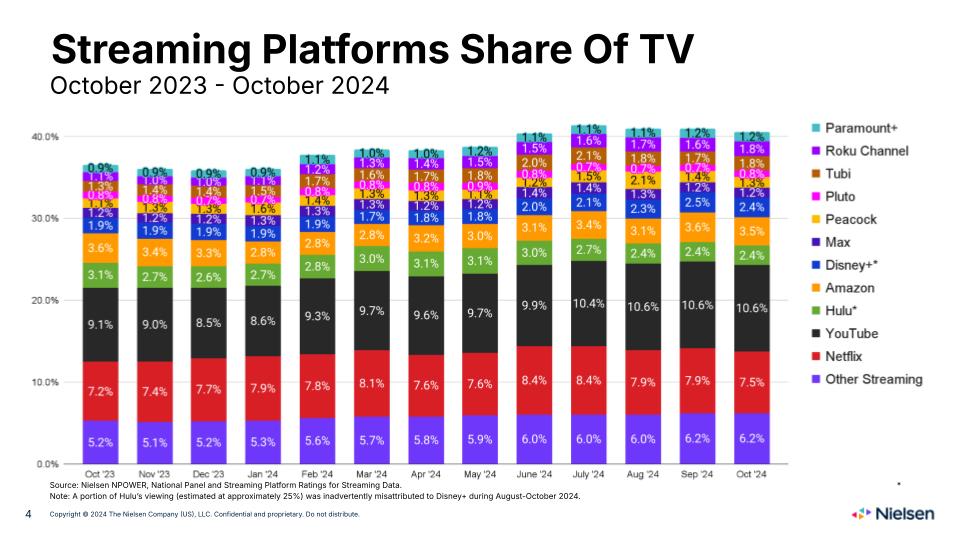

People still spend more time watching streaming services on TV screens than traditional TV networks. But per Nielsen’s latest The Gauge viewership report, in October they spent more time watching broadcast and cable TV networks than they had in September — which may not be saying much or is saying plenty about the state of traditional TV.

OK, that chart isn’t exactly eye-popping. Broadcast and cable TV still lag streaming’s watch time share by a healthy margin. But what that chart doesn’t quite capture is that broadcast TV turned in its biggest watch time month since January and its third straight month of month-over-month watch time growth. Meanwhile cable TV notched its first month-over-month increase since April.

Having said that, traditional TV watch time kinda shoulda been higher in October versus September. The MLB playoffs kicked off to join the NFL and college football seasons. Broadcast TV networks’ fall seasons were fully underway. And of course, there was all the political coverage as the U.S. presidential election neared.

“On a yearly basis, cable news was up 17% compared to October 2023, while cable sports was up 14%,” said Nielsen in a press release announcing The Gauge report for October.

As for streaming, the category actually ceded some of its watch time share in October. OK, half a percentage point. Which isn’t a ton. But there wasn’t a ton of movement in the streaming watch time rankings in general. Netflix surrendered the most share at 0.4 percentage points, while The Roku Channel secured the largest month-over-month gain with 0.2 percentage points. Beyond that, YouTube held its lead and Pluto TV stayed under the 1% threshold. Steady as ever.

Numbers to know

>60 million: Number of households worldwide that streamed the Mike Tyson-Jake Paul boxing match on Netflix.

120 million: Number of subscribers that Disney+ had at the end of the third quarter of 2024.

37%: Percentage share of new U.S. Disney+ subscribers in Q3 2024 that signed up for the ad-supported tier.

<50%: Percentage cut that Paramount is seeking in the fee it pays to use Nielsen’s measurement service.

What we’ve covered

How independent agencies are staking their claim in the creator economy:

- The BrandTech Group, BrainLabs and Stagwell are among the agencies to have acquired or formed agencies oriented around creators.

- The moves are meant, in part, to help the agencies avoid being disintermediated by influencer marketing agencies.

Read more about indie agencies and creators here.

How influencer shops and agencies are adding content studios to boost production speed, revenue:

- Some influencer agencies have built or expanded physical studio space for creators to use for filming.

- The studios are meant to make it easier for the influencer agencies to quickly churn out content.

Read more about influencer agencies’ content studios here.

TikTok pushes to attract SMBs and its latest advertising tool might just do the trick:

- TikTok has officially launched its Symphony Creative Studio.

- The AI-powered video generator tool enables advertisers to create TikTok videos in minutes.

Read more about TikTok here.

What a new TV show and its parallel mobile game say about the future of entertainment across media:

- Genvid Entertainment has connected its “DC Heroes United” streaming show with a mobile game that lets people vote on plot lines.

- The show will debut on Tubi on Nov. 21.

Read more about “DC Heroes United” here.

Influencer spending increases in Q4 after election blackout as brands integrate channels, commerce:

- A majority of surveyed brands said they plan to spend more money on influencer marketing next year.

- A potential TikTok ban in the U.S. still leaves some influencer dollars up for grabs in the new year.

Read more about influencer marketing spending here.

What we’re reading

Netflix’s livestreaming struggles:

A year and a half after Netflix struggled to live-stream a “Love Is Blind” reunion special, it ran into technical issues with its live broadcast of the Tyson-Paul fight, according to The New York Times. The stumble came a little more than a month before the streamer’s biggest livestream to date: an NFL game on Christmas Day with Beyoncé as the halftime performer.

Spotify’s video creator monetization program:

The streaming audio service will start giving video podcasters a cut of revenue from ad-free subscribers, though it’s unclear how Spotify will calculate creators’ cuts, according to The Verge.

YouTube’s podcast popularity:

Google’s video service has become a more popular platform for people in the U.S. to listen to podcasts than Spotify or Apple Podcasts, according to The Wall Street Journal.

Paramount vs. Nielsen:

The TV conglomerate and measurement provider remain at a stalemate after failing to agree on renewal terms last month, according to Ad Age.

Warner Bros. Discovery vs. NBA:

The TV conglomerate and sports league have settled their fight over WBD’s lapsed NBA rights deal by agreeing to give WBD some international rights to air NBA games as well as some access to NBA content, according to CNBC.