Nvidia CEO Jensen Huang’s keynote at NVIDIA’s GTC conference in Washington D.C. was a sweeping celebration of American innovation and a call to action for America to lead the AI revolution, with NVIDIA at the epicenter of this next Apollo moment. The 90-minute talk blended technical deep dives, major announcements, and forward-looking visions for AI, quantum computing, 6G, robotics, and manufacturing. It emphasized two simultaneous platform shifts: from general-purpose to accelerated computing, and from traditional software to work driven by AI agents.

America’s Innovation Legacy and AI as the New Industrial Revolution

Huang opened by narrating an emotional video that traced U.S. inventions (the transistor at Bell Labs, Hedy Lamarr’s wireless tech, the IBM System/360, the Intel microprocessor, Cray supercomputers, ARPANET, the iPod and iPhone). He framed AI as the next era, powered by NVIDIA GPUs as essential infrastructure on par with electricity and the internet.

AI factories are rising in America to provide abundance, life-saving tech, clean energy, and space exploration.

AI is a new industrial revolution that will test U.S. capacities the way the space age did.

NVIDIA’s accelerated computing (GPUs + CUDA) is the engine. It tackles problems that CPUs can no longer handle efficiently now that Dennard scaling has ended. Per-transistor performance gains plateaued around 2010, but transistor counts keep growing.
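For readers who want the reasoning behind the end of Dennard scaling, here is the standard first-order textbook argument. It is a simplified sketch that ignores leakage current. Dynamic switching power per transistor is roughly P = C·V²·f. Under ideal Dennard scaling, every linear dimension shrinks by a factor k > 1:

```latex
\begin{aligned}
C &\to \frac{C}{k}, \qquad V \to \frac{V}{k}, \qquad f \to k f, \qquad A \to \frac{A}{k^2} \\
P = C V^2 f &\to \frac{C}{k}\cdot\frac{V^2}{k^2}\cdot k f = \frac{P}{k^2} \\
\frac{P}{A} &\to \frac{P/k^2}{A/k^2} = \frac{P}{A} \quad \text{(power density stays constant)}
\end{aligned}
```

Once supply voltage stops shrinking (V held fixed), the same substitution gives P → P per transistor and P/A → k²·P/A. Packing in more transistors at higher clock speeds would then overheat the chip. That is why transistor counts kept rising while per-transistor speed gains stalled.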

Accelerated Computing: NVIDIA’s 30-Year Journey

Huang described the shift from CPUs to GPUs as a rare paradigm change, the major shift in 60 years of transistors and chips. GPUs enable parallel processing that puts massive transistor counts to work. The CUDA programming model keeps software compatible across generations and underpins 350+ CUDA-X libraries. Examples include cuLitho for chip lithography, cuOpt for optimization, MONAI for medical imaging, and cuQuantum for quantum simulation, each rewriting algorithms domain by domain.
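Why does accelerated computing require rewriting algorithms domain by domain instead of just swapping in a faster chip? One standard way to reason about it is Amdahl's law, which was not cited in the keynote and is offered here only as an illustration. Only the fraction of runtime that is actually moved onto the accelerator gets faster. The function name and the 100x accelerator figure below are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, accel_factor: float) -> float:
    """Overall speedup when only `parallel_fraction` of runtime is accelerated.

    Amdahl's law: S = 1 / ((1 - p) + p / s), where p is the accelerated
    fraction of the original runtime and s is how much faster that part runs.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if accel_factor <= 0:
        raise ValueError("accel_factor must be positive")
    serial = 1.0 - parallel_fraction  # the part still running at CPU speed
    return 1.0 / (serial + parallel_fraction / accel_factor)


if __name__ == "__main__":
    # Hypothetical workload: the accelerated portion runs 100x faster on a GPU.
    for p in (0.50, 0.90, 0.99):
        print(f"p={p:.2f}: {amdahl_speedup(p, 100.0):.1f}x overall")
```

With these toy numbers, a 100x accelerator yields only about 9x overall if 10% of the work stays serial. This is the reason each library has to move nearly all of a domain's algorithm onto the GPU.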

Reclaiming U.S. Leadership in 6G

The U.S. once dominated and defined wireless standards but now relies on foreign technology. Huang called 6G a once-in-a-lifetime chance for an American resurgence through AI-native networks.

Huang announced NVIDIA Arc and a partnership with Nokia. The new NVIDIA Arc (Aerial Radio Access Network Computer) product line integrates the Grace CPU, Blackwell GPU, and ConnectX networking with the Aerial CUDA-X library to create software-defined, AI-programmable base stations.

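AI for RAN uses reinforcement learning to improve spectral efficiency. This cuts energy use in wireless networks, which consume an estimated 1.5-2% of global power. AI on RAN turns base stations into edge-cloud computers for uses such as industrial robotics. Nokia will integrate Arc into its future base stations, potentially upgrading millions of sites worldwide. Arc is compatible with Nokia’s AirScale platform, enabling a seamless 5G-to-6G transition.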

Quantum Computing: Hybrid GPU-QPU Future

Referencing Richard Feynman’s 1981 vision of quantum computing, Huang noted last year’s breakthrough: there is now one stable, error-corrected logical qubit, built from tens to hundreds of physical qubits. Qubits are fragile, and error correction needs massive compute.

The presentation explained how fragile qubits are across modalities (superconducting, photonic, and others) and what quantum error correction requires.
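To show why one logical qubit consumes many physical ones, here is a toy sketch built on a classical repetition code with majority-vote decoding. This is an illustrative assumption, not the scheme used in the systems Huang described. Real logical qubits rely on codes such as the surface code, which correct both bit-flip and phase-flip errors and need fast decoding; that decoding is where GPU compute comes in. The function name and the 1% physical error rate are hypothetical.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Failure probability of majority-vote decoding for a distance-d repetition code.

    Each of the d physical copies flips independently with probability p.
    Decoding fails when more than half of the copies flip.
    """
    if d < 1 or d % 2 == 0:
        raise ValueError("d must be a positive odd integer")
    # Sum the binomial probabilities of every majority-flip outcome.
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


if __name__ == "__main__":
    p = 0.01  # hypothetical physical error rate
    for d in (1, 3, 5, 7):
        print(f"d={d}: logical error rate {logical_error_rate(p, d):.2e}")
```

Adding physical copies drives the logical error rate down only while p is below the code's threshold (0.5 for this toy code). Raising reliability therefore costs many physical qubits per logical qubit.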

NVQLink is a new interconnect for terabyte-scale data transfer between QPUs and GPUs, used for error correction, calibration, and hybrid simulations.

Together, NVQLink and CUDA-Q form a scalable architecture for hundreds to thousands of qubits. CUDA-Q, an extension of CUDA, orchestrates QPUs and GPUs with microsecond latency. The approach is supported by 17 quantum companies (including the publicly traded IonQ, as well as Quantinuum) and 8 DOE labs.

The U.S. Department of Energy will build 7 new AI supercomputers with NVIDIA to accelerate scientific research (AI-augmented simulations plus quantum).

AI is Reinventing the Computing Stack

Past software (Excel, web browsers) was, and still is, a $1 trillion market for tools. AI agents, by contrast, will perform work across the $100 trillion global economy.

AI factories are AI data centers built for training and inference. This new infrastructure produces valuable tokens: smart outputs generated at scale and with cost efficiency. Unlike general-purpose data centers, AI factories are specialized. The value chain runs energy → GPUs → tokens → apps.

There are now three major Scaling Laws.

1. Pre-training (memorization)

2. Post-training (skills/reasoning)

3. Thinking (inference/research).

This creates two exponentials: smarter models need more compute, and usage surges as models become more intelligent and worth paying for (Cursor, Claude).

Virtuous cycle: Usage → revenue → more compute → smarter AI → more usage.

The end of Dennard scaling and the slowing of Moore’s law demand extreme co-design. Chips, systems, software, models, and applications all have to be rearchitected at the same time.

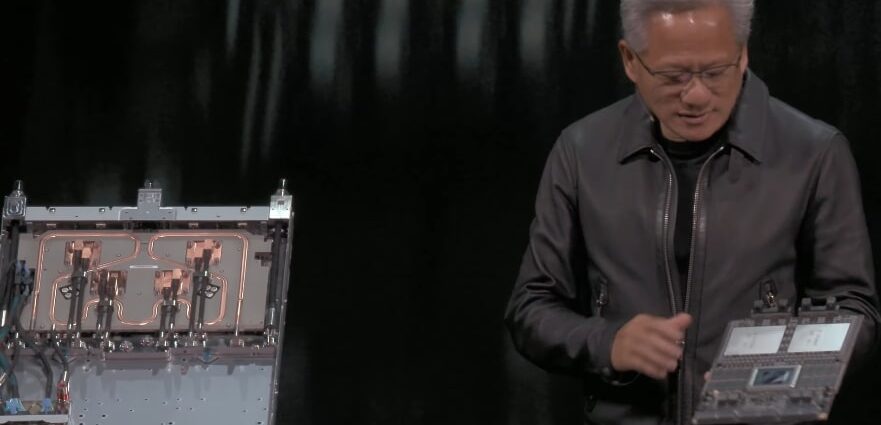

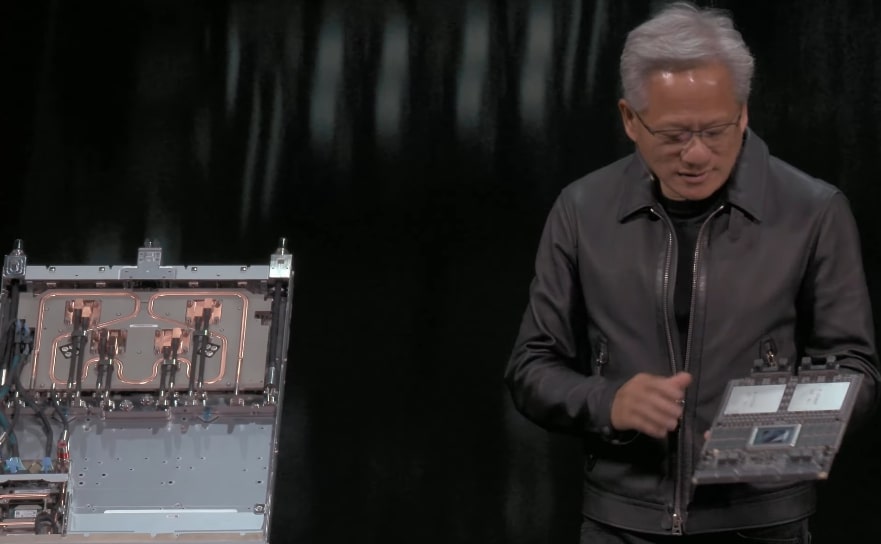

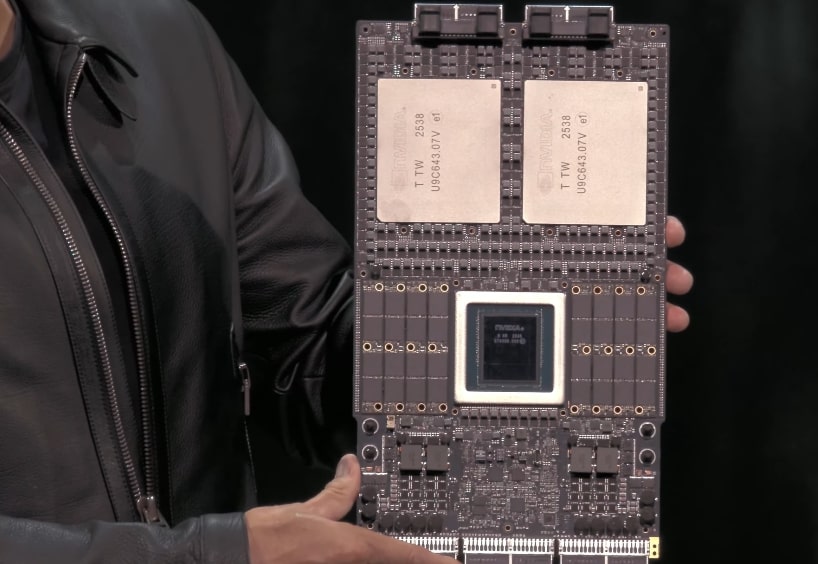

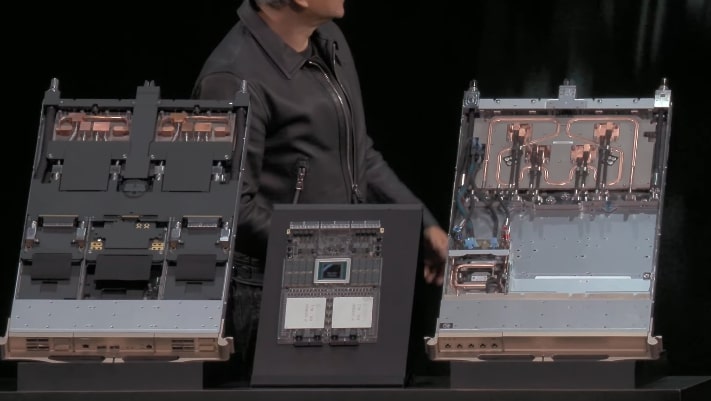

Blackwell and Vera Rubin Chips

Grace Blackwell NVL72: Rack-scale “thinking machine” (72 GPUs as one via NVLink; 130 TB/s bandwidth).
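Dividing the quoted aggregate NVLink bandwidth across the rack gives a per-GPU figure. This is simple arithmetic on the article's number, and it lines up with the 1.8 TB/s per-GPU NVLink bandwidth NVIDIA quotes for Blackwell.

```latex
\frac{130\ \text{TB/s}}{72\ \text{GPUs}} \approx 1.8\ \text{TB/s per GPU}
```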

Blackwell delivers 10x Hopper’s performance per GPU, according to SemiAnalysis benchmarks.

The B200 produces the lowest-cost tokens when measured by TCO (total cost of ownership).
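The TCO point can be made concrete with a minimal sketch. It assumes cost per token is simply lifetime TCO divided by the tokens produced over that lifetime. The function name and every input number below are hypothetical illustrations, not figures from the keynote or SemiAnalysis.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def cost_per_million_tokens(
    tco_usd: float,
    lifetime_years: float,
    tokens_per_second: float,
    utilization: float = 1.0,
) -> float:
    """Dollars per million tokens = lifetime TCO / lifetime tokens produced."""
    if tokens_per_second <= 0 or lifetime_years <= 0 or not 0 < utilization <= 1:
        raise ValueError("throughput and lifetime must be positive; utilization in (0, 1]")
    # Total tokens the system emits over its service life at the given utilization.
    tokens = tokens_per_second * utilization * SECONDS_PER_YEAR * lifetime_years
    return tco_usd / tokens * 1_000_000


if __name__ == "__main__":
    # Hypothetical systems: B costs twice as much as A over its lifetime
    # but produces three times as many tokens per second.
    a = cost_per_million_tokens(1_000_000, 4, 10_000, utilization=0.6)
    b = cost_per_million_tokens(2_000_000, 4, 30_000, utilization=0.6)
    print(f"A: ${a:.3f} per 1M tokens, B: ${b:.3f} per 1M tokens")
```

Under this model, a pricier system still yields cheaper tokens whenever its throughput rises faster than its total cost of ownership.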

Nvidia has $500 billion of firm orders for Blackwell and Rubin through 2026. That covers 20 million GPUs, compared with 4 million Hopper chips shipped over Hopper’s entire lifetime. These figures exclude China, and the U.S. and China may reach an agreement that would let Nvidia ship chips to China.

Capital spending by CSPs (cloud service providers such as Amazon and CoreWeave) is hitting record highs.

Blackwell is also made in America. Fabs in Arizona are already producing the silicon, and Indiana is making HBM (high bandwidth memory).

Racks are assembled in Texas. Each rack has 1.2 million parts and 130 trillion transistors and weighs 2 tons.

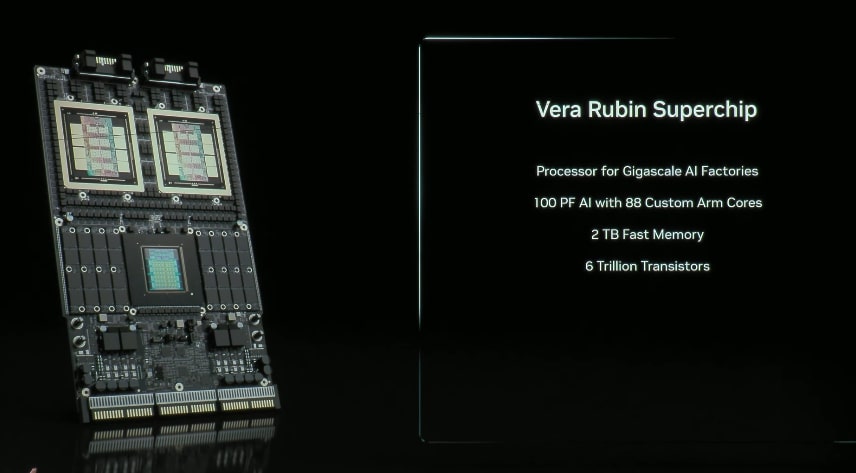

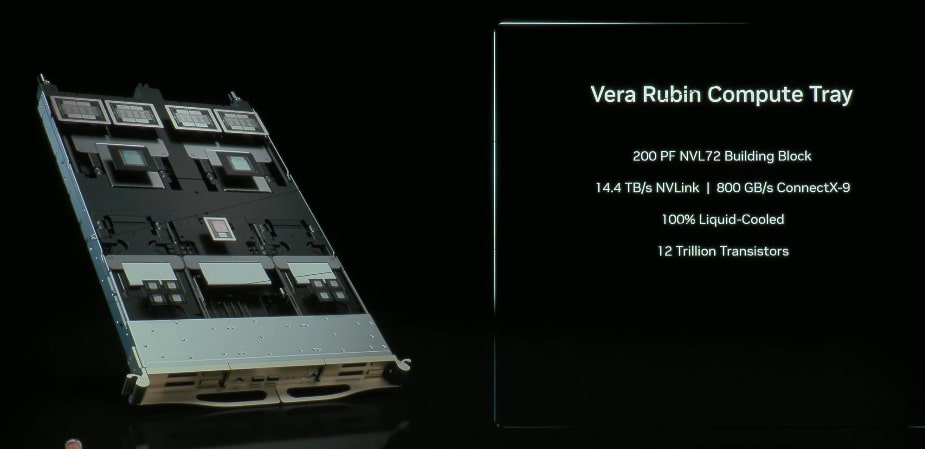

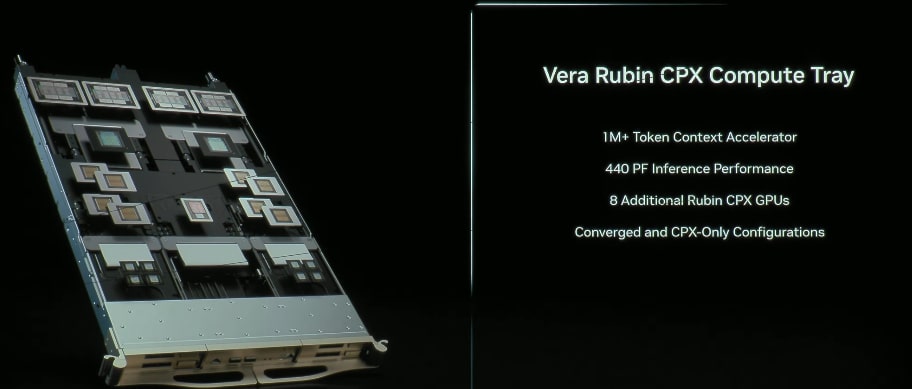

Vera Rubin is the next-generation platform, with production in 2026. It will deliver 100 petaflops of performance per chip.

It is completely cableless.

It is 100% liquid-cooled.

It features the Vera Rubin Superchip (8 GPUs per node).

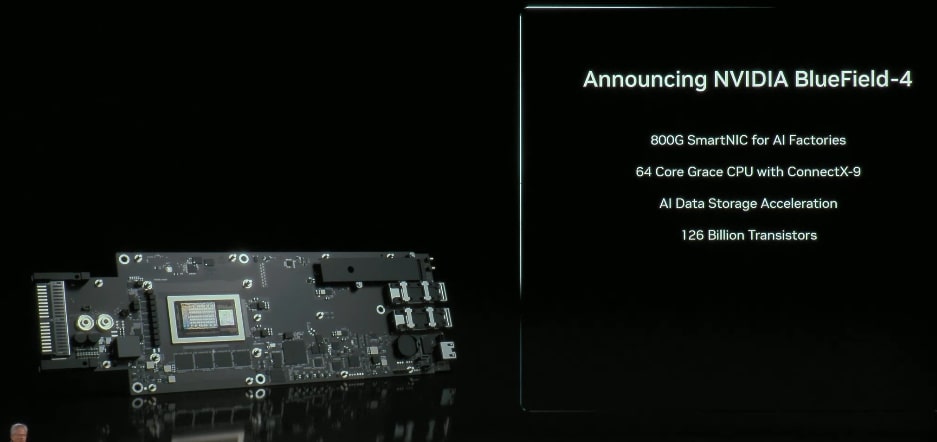

BlueField-4 is a KV-cache processor for context and memory. Models need the KV cache to remember and quickly retrieve everything said earlier in a conversation.
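To make the KV-cache idea concrete, here is a toy single-head sketch in plain Python, assuming standard scaled dot-product attention. The class and method names are hypothetical, and this is not how BlueField-4 or any NVIDIA library implements it. Production caches hold per-layer, per-head tensors that grow with context length, which is why they become a memory problem.

```python
import math
from typing import List

Vector = List[float]


class KVCache:
    """Toy single-head key/value cache for autoregressive decoding.

    Each processed token appends one key and one value. A new query attends
    over every cached entry, so earlier keys/values are reused, not recomputed.
    """

    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []

    def append(self, key: Vector, value: Vector) -> None:
        self.keys.append(key)
        self.values.append(value)

    def __len__(self) -> int:
        return len(self.keys)

    def attend(self, query: Vector) -> Vector:
        """Scaled dot-product attention of `query` over all cached positions."""
        if not self.keys:
            raise ValueError("cache is empty")
        scale = 1.0 / math.sqrt(len(query))
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in self.keys]
        m = max(scores)  # subtract the max before exp() for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        dim = len(self.values[0])
        # Softmax-weighted average of the cached value vectors.
        return [sum(w * v[i] for w, v in zip(weights, self.values)) / total for i in range(dim)]


if __name__ == "__main__":
    cache = KVCache()
    # Hypothetical 2-d keys/values for three earlier tokens in a conversation.
    cache.append([1.0, 0.0], [10.0, 0.0])
    cache.append([0.0, 1.0], [0.0, 10.0])
    cache.append([1.0, 1.0], [5.0, 5.0])
    print(len(cache), cache.attend([1.0, 0.0]))
```

Memory grows linearly with the number of tokens kept, so long conversations push the cache out of GPU memory and into dedicated storage tiers.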

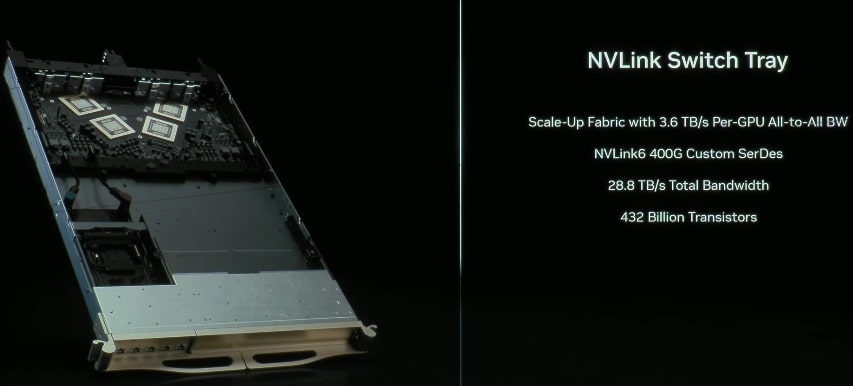

The platform's networking uses NVLink and Spectrum-X switches, with silicon photonics.

Each Vera Rubin rack has 1.5 million parts, and the design scales to gigawatt factories at 8,000 racks per gigawatt.
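Here is a quick back-of-the-envelope check on the gigawatt figure. It assumes the 1 GW covers the whole facility, including cooling and power conversion, so 125 kW is an all-in power budget per rack rather than the rack's own draw.

```latex
\begin{aligned}
\frac{1\ \text{GW}}{8{,}000\ \text{racks}} &= \frac{10^{9}\ \text{W}}{8 \times 10^{3}} = 1.25 \times 10^{5}\ \text{W} = 125\ \text{kW per rack} \\
1.5 \times 10^{6}\ \frac{\text{parts}}{\text{rack}} \times 8{,}000\ \text{racks} &= 1.2 \times 10^{10}\ \text{parts per gigawatt}
\end{aligned}
```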

Omniverse DSX Will Make AI Data Centers

Omniverse DSX is Nvidia’s blueprint for gigascale AI factories.

It co-designs the building, power, and cooling together with the NVIDIA stack.

Omniverse digital twins simulate and optimize the facility: Jacobs handles layouts, Siemens and PTC provide assets, and Ansys and Cadence model thermals.

Partners Bechtel and Vertiv will deliver prefabricated modules, and AI agents (Phaidra, Emerald AI) will operate the facility after it is built.

This approach is expected to save billions of dollars per year.

NVIDIA is building a Virginia research center for data center and AI factory research.

Open Models and Ecosystem

Open models, advancing through reasoning, multimodality, and distillation, fuel startups and research.

NVIDIA leads open-model contributions, with 23 models on leaderboards spanning speech, reasoning, physical AI, and biology.

Huang called open models essential for U.S. leadership.

NVIDIA is in all clouds (AWS, Google, Azure, Oracle).

NVIDIA also powers SaaS and software platforms (ServiceNow, SAP, Synopsys, Cadence).

Startups and neocloud providers such as CoreWeave and Lambda build GPU clouds.

CrowdStrike is working with Nvidia on AI agents for speed-of-light cybersecurity, with detection in the cloud and at the edge.

Palantir and Nvidia are accelerating Palantir’s Ontology platform for massive data processing (structured and unstructured) in government and enterprise.

Physical AI: Robotics, Factories, and Robotaxis

Physical AI runs on GB200 for training, Omniverse for simulation and digital twins, and Thor/Orin for operation, all CUDA-enabled for physics-aware AI.

Foxconn’s Houston plant, which builds NVIDIA systems, was designed with Omniverse and Siemens tools.

The plant uses Fanuc and Fii robots, plus Metropolis and Cosmos agents for monitoring and onboarding.

Humanoid and robotics partnerships include Figure AI ($40B valuation), Agility (warehouses), J&J (surgery), and Disney (the Newton simulator behind the adorable “Blue” robot demo).

Robotaxi partners will use Drive Hyperion (new chips). Uber and Nvidia will work toward a fleet of 100,000 robotaxis starting around 2027. Drive Hyperion is a standardized AV (autonomous vehicle) platform that provides sensors and compute for Lucid, Mercedes, and Stellantis. It lets AV developers such as Wayve and Waabi build on a common chassis.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker, and a guest on numerous radio and podcast interviews. He is open to public speaking and advising engagements.