Inside a laboratory nestled in the foothills of the Rocky Mountains, amid a labyrinth of lenses, mirrors, and other optical machinery bolted to a vibration-resistant table, an apparatus resembling a chimney pipe rises toward the ceiling. On a recent visit, the silvery pipe held a cloud of thousands of supercooled cesium atoms launched upward by lasers and then left to float back down. With each cycle, a maser—like a laser that produces microwaves—hit the atoms to send their outer electrons jumping to a different energy state.

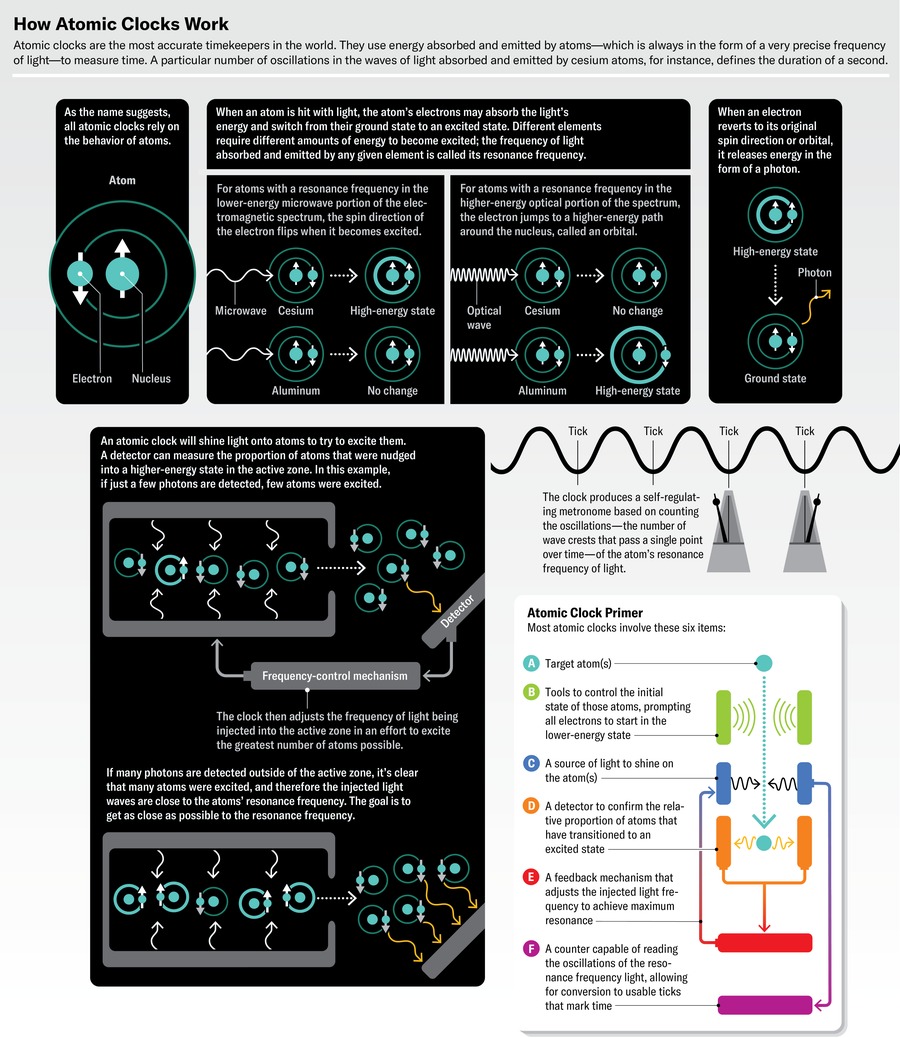

The machine, called a cesium fountain clock, was in the middle of a two-week measurement run at a National Institute of Standards and Technology (NIST) research facility in Boulder, Colo., repeatedly fountaining atoms. Detectors inside measured photons released by the atoms as they settled back down to their original states. Atoms make such transitions by absorbing a specific amount of energy and then emitting it in the form of a specific frequency of light, meaning the light’s waves always reach their peak amplitude at a regular, dependable cadence. This cadence provides a natural temporal reference that scientists can pinpoint with extraordinary precision.

By repeating the fountain process hundreds of thousands of times, the instrument narrows in on the exact transition frequency of the cesium atoms. Although it’s technically a clock, the cesium fountain could not tell you the hour. “This instrument does not keep track of time,” says Vladislav Gerginov, a senior research associate at NIST and the keeper of this clock. “It’s a frequency reference—a tuning fork.” By tuning a beam of light to match this resonance frequency, metrologists can “realize time,” as they phrase it, counting the oscillations of the light wave.

The signal from this tuning fork—about nine gigahertz—is used to calibrate about 18 smaller atomic clocks at NIST that run 24 hours a day. Housed in egg incubators to control the temperature and humidity, these clocks maintain the official time for the U.S., which is compared with similar measurements in other countries to set Coordinated Universal Time, or UTC.

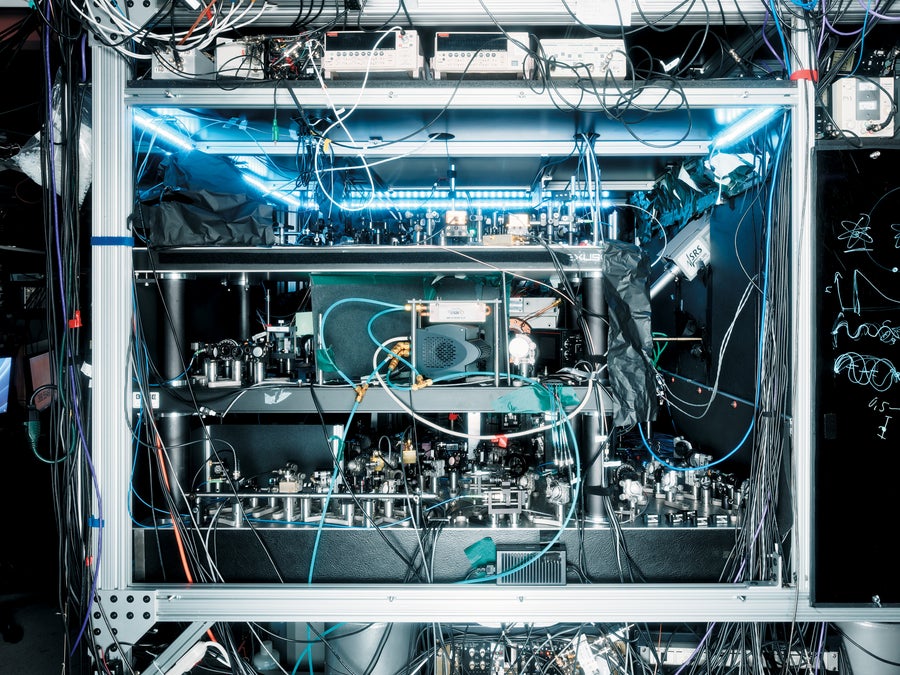

A thorium nuclear clock resides at the JILA laboratory in Colorado.

Jason Koxvold

Gerginov, dressed casually in a short-sleeve plaid shirt and sneakers, spoke of the instrument with an air of pride. He had recently replaced the clock’s microwave cavity, a copper passageway in the middle of the pipe where the atoms interact with the maser. The instrument would soon be christened NIST-F4, the new principal reference clock for the U.S. “It’s going to be the primary standard of frequency,” Gerginov says, looking up at the metallic fountain, a three-foot-tall vacuum chamber with four layers of nickel-iron-alloy magnetic shielding. “Until the definition of the second changes.”

Since 1967 the second has been defined as the duration of 9,192,631,770 cycles of cesium’s resonance frequency. In other words, when the outer electron of a cesium atom falls to the lower state and releases light, the amount of time it takes to emit 9,192,631,770 cycles of the light wave defines one second. “You can think of an atom as a pendulum,” says NIST research fellow John Kitching. “We cause the atoms to oscillate at their natural resonance frequency. Every atom of cesium is the same, and the frequencies don’t change. They’re determined by fundamental constants. And that’s why atomic clocks are the best way of keeping time right now.”
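
As a rough illustration of what that definition means in practice, the sketch below converts a count of cesium oscillations into elapsed time. The function names are made up for this example; only the cycle count comes from the definition itself.

```python
# Number of cesium transition cycles that make up one second,
# as quoted in the definition above.
CESIUM_CYCLES_PER_SECOND = 9_192_631_770


def cycles_to_seconds(cycle_count: int) -> float:
    """Convert a count of cesium oscillations into elapsed seconds."""
    return cycle_count / CESIUM_CYCLES_PER_SECOND


def period_of_one_cycle() -> float:
    """Duration of a single cesium oscillation, in seconds."""
    return 1 / CESIUM_CYCLES_PER_SECOND


print(cycles_to_seconds(9_192_631_770))       # one second, by definition
print(cycles_to_seconds(60 * 9_192_631_770))  # one minute's worth of cycles
print(period_of_one_cycle())                  # a bit more than a tenth of a nanosecond
```

Counting whole cycles is the entire trick: the finer the cycle, the finer the ruler.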

But cesium clocks are no longer the most accurate clocks available. In the past five years the world’s most advanced atomic clocks have reached a critical milestone by taking measurements that are more than two orders of magnitude more accurate than those of the best cesium clocks. These newer instruments, called optical clocks, use different atoms, such as strontium or ytterbium, that transition at much higher frequencies. They release optical light, as opposed to the microwave light given out by cesium, effectively dividing the second into about 50,000 times as many “ticks” as a cesium clock can measure.

The fact that optical clocks have surpassed the older atomic clocks has created something of a paradox. The new clocks can measure time more accurately than a cesium clock—but cesium clocks define time. The duration of one second is inherently linked to the transition frequency of cesium. Until a redefinition occurs, nothing can truly be a more accurate second because 9,192,631,770 cycles of cesium’s resonance frequency is what a second is.

Atomic clock scientist Jun Ye of JILA hopes such nuclear clocks can eventually beat today’s most accurate timekeepers.

Jason Koxvold

This problem is why many scientists believe it is time for a new definition of the second. In 2024 a task force set up by the International Bureau of Weights and Measures (BIPM), headquartered in Sèvres, France, released a road map that established criteria for redefining the second. These include that the new standard is measured by at least three different clocks at different institutions, that those measurements are routinely compared with values from other types of clocks and that laboratories around the world will be able to build their own clocks to measure the target frequency. If sufficient progress is made on the criteria in the next two years, then the second might change as soon as 2030.

But not everyone is on board with redefining the second now. For one thing, there’s no clear immediate benefit. Today’s cesium clocks are accurate enough for most practical applications, including synchronizing the GPS satellites we all depend on. We can always improve the accuracy of the second later if new innovations come along that require better timing. “Today we don’t really profit from an immediate change,” says Nils Huntemann, a scientist at the Physikalisch-Technische Bundesanstalt (PTB), the national metrology institute of Germany. Redefining the second wouldn’t be straightforward, either: scientists would be forced to pick a new standard from the many advanced atomic clocks now in existence, with improvements being made all the time. How should they choose?

Regardless of the complications, some physicists believe that they have an obligation to use the best clocks available. “It’s just a matter of basic principle,” says Elizabeth Donley, chief of the time and frequency division at NIST. “You want to allow for the best measurements you can possibly make.”

The world’s first clocks were invented thousands of years ago, when the first human civilizations built devices that tracked the sun’s movement to divide the day into intervals. The earliest versions of sundials were made by the ancient Egyptians around 1500 B.C.E. Later, water clocks, first used by Egyptians and called clepsydras, meaning “water thieves,” by the ancient Greeks, marked time by letting water drain out of vessels with a hole punched in the bottom. These instruments were perhaps the first to measure a duration of time independent of the movements of celestial bodies. Mechanical clocks driven by weights debuted in medieval European churches, and they ticked along at consistent rates, leading to the modern 24-hour day. The tolling of bells to mark the hour even gave us the word “clock,” which has its roots in the Latin clocca, meaning “bell.”

As mechanical clocks became more precise, particularly with the development of the pendulum clock in the mid-17th century, timekeepers further divided the hour into minutes and seconds. (First applied to angular degrees, the word “minute” comes from the Latin prima minuta, meaning the “first small part,” and “second” comes from secunda minuta, the “second small part.”) For centuries towns maintained their own local clocks, adjusting them periodically so the strike of noon occurred just as the sundial indicated midday. It wasn’t until the 19th century, when distant rail stations needed to maintain coordinated train schedules, that time zones were established and timekeeping was standardized around the world.

Jen Christiansen; Sources: Elizabeth Donley and John Kitching/National Institute of Standards and Technology (scientific reviewers)

Clocks improved drastically in the 20th century after French physicists and brothers Jacques and Pierre Curie discovered that applying an electric current to a crystal of quartz causes it to vibrate with a stable frequency. The first clock that used a quartz oscillator was developed by Warren Marrison and Joseph Horton of Bell Laboratories in 1927. The clock ran a current through quartz and used a circuit to divide the resulting frequency until it was low enough to drive a synchronous motor that controlled the clock’s face. Today billions of quartz clocks are produced every year for wristwatches, mobile devices, computers, and other electronics.

The key innovation that led to atomic clocks came from American physicist Isidor Isaac Rabi of Columbia University, who won the Nobel Prize in Physics in 1944 for developing a way to precisely measure atoms’ resonance frequencies. His technique, called the molecular-beam magnetic resonance method, finely tuned a radio frequency to cause atoms’ quantum states to transition. In 1939 Rabi suggested using this method to build a clock, and the next year his colleagues at Columbia applied his technique to determine the resonance frequency of cesium.

This element was viewed as an ideal reference atom for timekeeping. It’s a soft, silvery metal that is liquid near room temperature, similar to mercury. Cesium is a relatively heavy element, meaning it moves more slowly than lighter elements and is therefore easier to observe. Its resonance frequency is also higher than those of other clock candidates of the time, such as rubidium and hydrogen, meaning it had the potential to create a more precise time standard. These properties eventually won cesium the role of defining the second nearly 30 years later.

But the first atomic clock was not a cesium clock. In 1949 Harold Lyons, a physicist at NIST’s precursor, the National Bureau of Standards (NBS), built an atomic clock based on Rabi’s magnetic resonance method using ammonia molecules. It looked like a computer rack with a series of gauges and dials on it, so Lyons affixed a clockface to the top for a public demonstration to indicate that his machine was, in fact, a clock. This first atomic clock, however, couldn’t match the precision of the best quartz clocks of the time, and ammonia was abandoned when it became clear that cesium clocks would produce better results.

A cloud of strontium atoms is seen in an optical lattice clock at the German national metrology institute Physikalisch-Technische Bundesanstalt.

Christian Lisdat/PTB

Both the NBS and the National Physical Laboratory (NPL) in the U.K. developed cesium beam clocks in the 1950s. A key breakthrough came from Harvard University physicist Norman Ramsey, who found that it was possible to improve the measurements by using two pulses of microwaves to induce the atomic transitions rather than one. Cesium clocks continued to advance for the remainder of the century and, along with atomic clocks using different elements, became more precise and more compact.

At the time, the second was defined according to astronomical time. Known as the ephemeris second, it was equal to 1/31,556,925.9747 of the tropical year (the time it takes for the sun to return to the same position in the sky) in 1900. Between 1955 and 1958, NPL scientists compared measurements from their cesium beam clock with the ephemeris second as measured by the U.S. Naval Observatory by tracking the position of the moon with respect to background stars. In August 1958 the second was calculated as 9,192,631,770 cycles of the cesium transition frequency—the same number that would be used for the new definition nine years later.
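
For a sense of scale, the ephemeris fraction can be turned back into the length of the tropical year in days. This is only an arithmetic check on the figure quoted above; the constant and function names are invented for the example.

```python
# Ephemeris definition quoted above: one second was 1/31,556,925.9747
# of the tropical year 1900, so the year contained this many seconds.
SECONDS_PER_TROPICAL_YEAR_1900 = 31_556_925.9747
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def tropical_year_in_days() -> float:
    """Length of the tropical year 1900 expressed in 86,400-second days."""
    return SECONDS_PER_TROPICAL_YEAR_1900 / SECONDS_PER_DAY


print(round(tropical_year_in_days(), 4))  # close to the familiar 365.2422 days
```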

Since then, atomic clocks have continued to progress, particularly with the development of cesium fountain clocks in the 1980s. But by 2006 newer clocks were beating them.

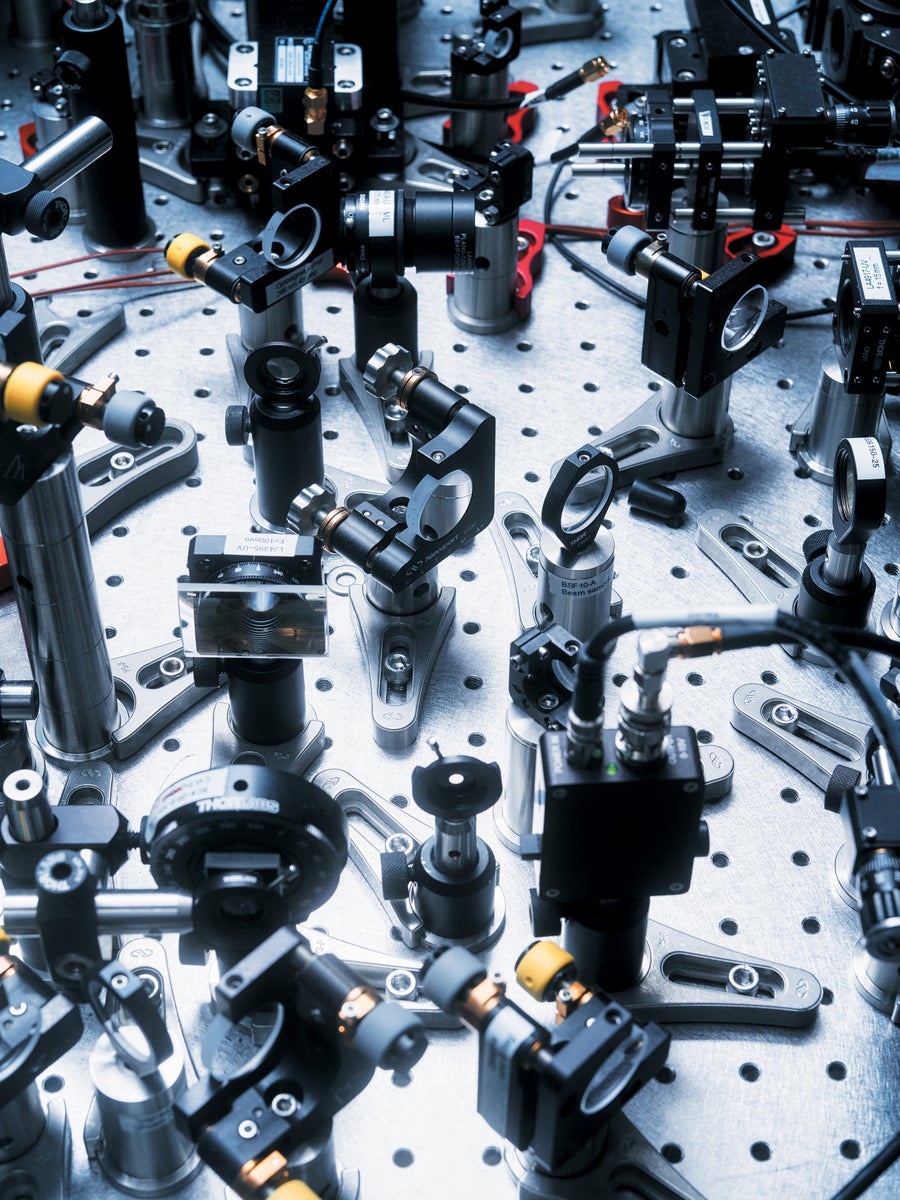

In addition to the clocks at NIST, some of the most advanced timekeepers in the world can be found at the University of Colorado Boulder, down the street in another lab pushing the frontier of timekeeping. JILA, a joint venture of NIST and the university, houses four “optical lattice clocks” that are among the global record holders for accuracy. (The lab was previously called the Joint Institute for Laboratory Astrophysics and now is simply known by the acronym.)

These state-of-the-art instruments are housed in large rectangular boxes with sliding doors that double as dry-erase boards, each covered in equations and diagrams. Components twinkle in the dim light of the lab as lasers and readout devices pulse with light.

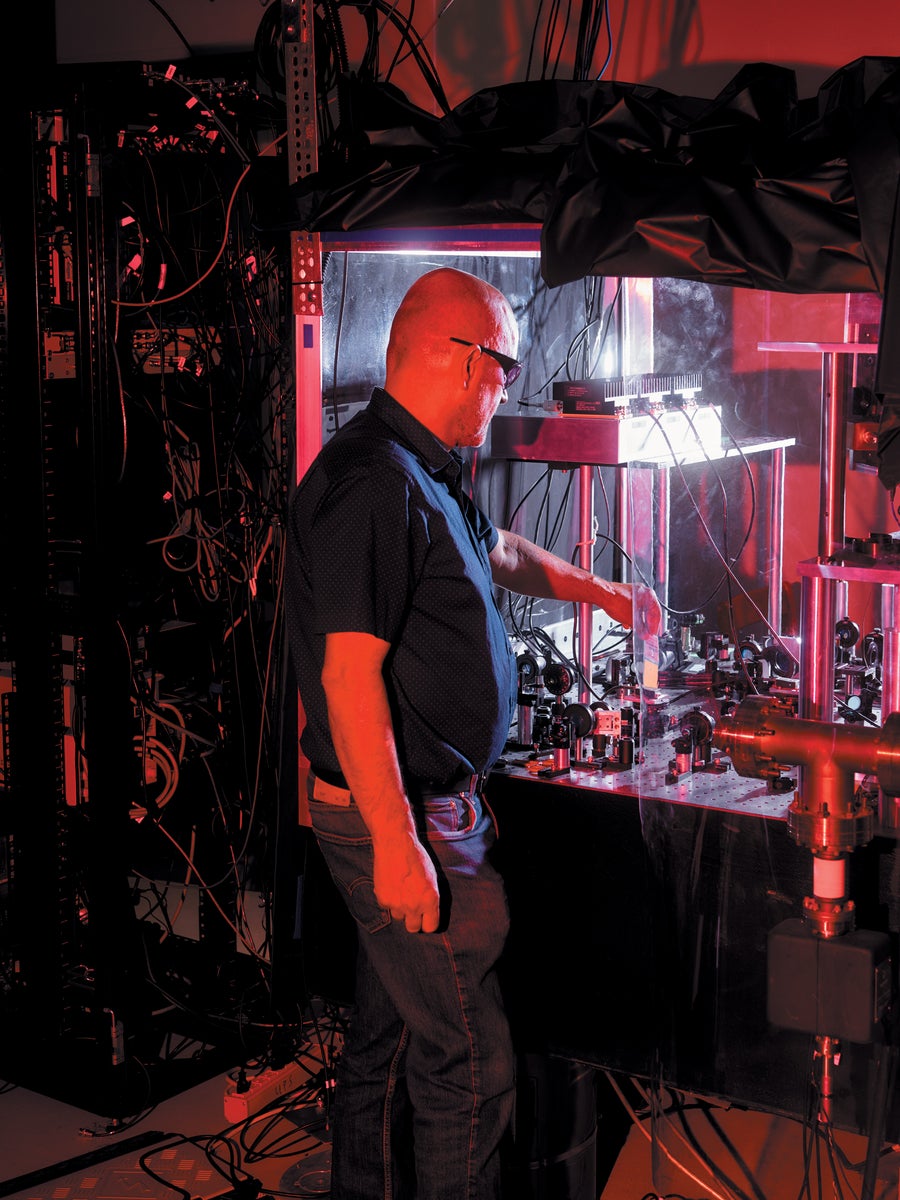

Elizabeth Donley is chief of the time and frequency division at the U.S. National Institute of Standards and Technology.

Jason Koxvold

Each clock works by firing two lasers at each other to create an interference pattern called an optical lattice, a grid with areas of high and low intensity. Pancake-shaped clouds of thousands of neutral strontium atoms become trapped in the high-intensity parts of the lattice, suspended in place.

Another laser then induces an electron transition in the atoms, pushing the outer electrons up an entire orbital level. This is a larger transition than occurs in the cesium atoms, where the electrons only move up one “hyperfine” level. But as in the cesium clock, detectors look for photons released when the electrons settle back to their original states to confirm that the laser is at the correct frequency to make the electrons hop. Compared with the cesium transition, which occurs at about nine billion hertz, the strontium transition requires a much higher frequency: 429,228,004,229,873.65 Hz.
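
The gap between the two frequencies is easier to appreciate as a ratio. The short sketch below divides the strontium figure by the cesium figure, using only the two numbers quoted in this article; the names are illustrative.

```python
# Transition frequencies as quoted in the article, in hertz.
CESIUM_HZ = 9_192_631_770
STRONTIUM_HZ = 429_228_004_229_873.65


def tick_ratio(optical_hz: float, microwave_hz: float) -> float:
    """How many optical cycles fit inside one microwave cycle."""
    return optical_hz / microwave_hz


ratio = tick_ratio(STRONTIUM_HZ, CESIUM_HZ)
print(f"{ratio:,.0f}")  # tens of thousands of strontium cycles per cesium cycle
```

The result, a little under 47,000, is the basis for the rounded "about 50,000 times as many ticks" comparison between optical and cesium clocks.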

Each of the four clocks in the lab serves a different purpose, measuring interactions between the atoms or effects from the environment—such as gravity, temperature fluctuations or wayward electromagnetic fields—in an attempt to reduce these sources of uncertainty. Optical clocks are so sensitive that the slightest disturbance, even someone slamming a nearby door, will shift the target transition frequency.

The key limiting factor in an optical lattice clock is blackbody radiation, says Jun Ye, lead researcher of the JILA lab. This radiation is the thermal energy released by any body of mass because of its temperature alone. To compensate for this effect, Ye and his team built a new thermal-control system inside the vacuum chamber of one of the clocks, a “fairly heroic effort” that Ye attributes to his students. The project allowed them to measure the transition frequency of strontium with a systematic uncertainty of 8.1 × 10⁻¹⁹, the most accurate clock measurement ever made. This strontium optical lattice clock and other, similar models are now among the leading candidates to redefine the second.

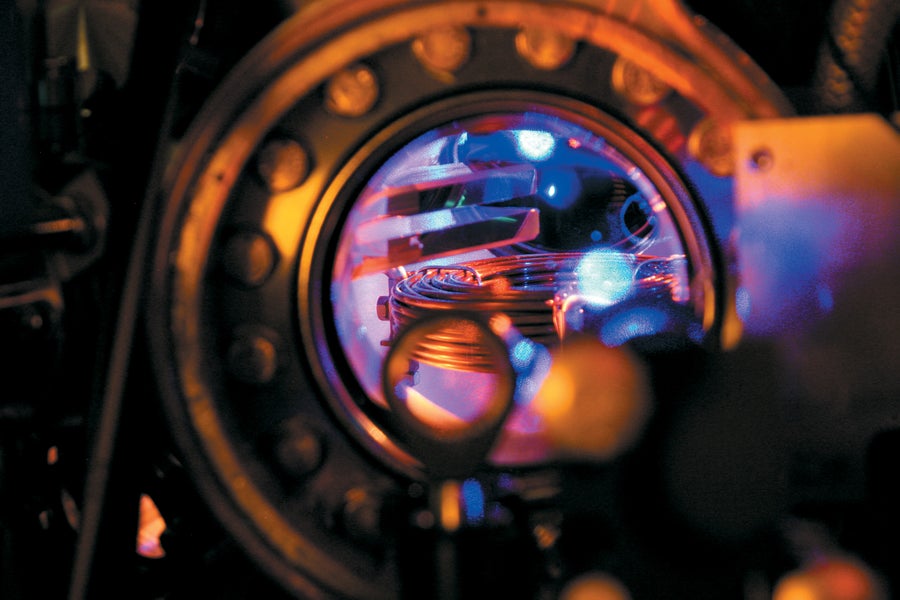

Cesium fountain clocks use a maze of lasers to control and measure atoms.

Jason Koxvold

The other main contenders are called single-ion clocks. Some of the best examples can be found at NIST and at the German PTB lab. This type suspends one charged ion (in this case, an atom with one or more electrons removed so that it carries a positive charge) within a trap of electromagnetic fields and then induces an atomic transition with a laser. Currently the most accurate of these clocks uses an aluminum ion.

Single-ion clocks avoid the noise that light lattices introduce to a system, Huntemann says, and “there is generally a smaller sensitivity to external fields,” including fields in the experiment as well as the environment. Optical lattice clocks, however, scrutinize thousands of atoms at once, improving accuracy.

Huntemann is researching ways to trap and measure multiple ions at once, such as strontium and ytterbium ions, within the same clock. This approach would allow scientists to probe two different atomic transitions simultaneously, and the clock could average its frequency measurements more quickly—though not as fast as an optical lattice clock.

Ion clocks and optical lattice clocks have been trading the accuracy record back and forth for the past two decades. They have even demonstrated how time passes faster at higher elevations, a prediction from Einstein’s general theory of relativity, which showed that time dilates, or stretches, closer to large masses (in this case, Earth). In a 2022 experiment, parts of a strontium optical lattice clock at JILA separated by just a millimeter in height measured a time difference on the order of 0.0000000000000000001 (10⁻¹⁹). This tiny aberration would have been too small for a cesium clock to detect.
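
The size of that effect can be estimated with the standard weak-field approximation, in which the fractional difference in clock rates is roughly g × Δh / c². The sketch below assumes the conventional value of standard gravity rather than the local value in Boulder, so it is an order-of-magnitude check and not a reconstruction of the experiment's analysis.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by definition
STANDARD_GRAVITY_M_PER_S2 = 9.80665     # assumed; local gravity differs slightly


def fractional_time_shift(height_difference_m: float,
                          gravity: float = STANDARD_GRAVITY_M_PER_S2) -> float:
    """Approximate fractional rate difference between two clocks
    separated vertically by height_difference_m near Earth's surface."""
    return gravity * height_difference_m / SPEED_OF_LIGHT_M_PER_S ** 2


print(f"{fractional_time_shift(0.001):.2e}")  # one millimeter: about 1.1e-19
print(f"{fractional_time_shift(1.0):.2e}")    # one meter: about 1.1e-16
```

A one-millimeter separation lands right at the 10⁻¹⁹ level, which is why only clocks with uncertainties in that range can see it.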

If scientists choose to redefine the second, they must decide not only which clock to use but also which atomic transition: that of strontium atoms or ytterbium or aluminum ions—or something else. One possible solution is to base the definition on not just one atomic transition but the average of all the transitions from different kinds of optical clocks. If an ensemble of clocks, each with its own statistical weighting, is used to redefine the second, then future clocks could be added to the definition as needed.
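
One generic way to combine measurements with different uncertainties is an inverse-variance weighted mean, sketched below. This is an assumption chosen for illustration, not necessarily the weighting scheme a redefinition would adopt, and the clock names and numbers are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class ClockReading:
    name: str
    fractional_offset: float  # measured offset of a shared reference (dimensionless)
    uncertainty: float        # one-sigma fractional uncertainty


def weighted_ensemble(readings: list[ClockReading]) -> tuple[float, float]:
    """Combine readings with inverse-variance weights.

    Returns the weighted mean offset and its combined uncertainty.
    """
    weights = [1.0 / r.uncertainty ** 2 for r in readings]
    total_weight = sum(weights)
    mean = sum(w * r.fractional_offset for w, r in zip(weights, readings)) / total_weight
    return mean, total_weight ** -0.5


# Hypothetical values, for illustration only.
readings = [
    ClockReading("lattice clock A", 2.0e-18, 1.0e-18),
    ClockReading("ion clock B", 4.0e-18, 2.0e-18),
    ClockReading("lattice clock C", 3.0e-18, 1.5e-18),
]
mean, uncertainty = weighted_ensemble(readings)
print(f"ensemble offset {mean:.2e}, uncertainty {uncertainty:.2e}")
```

Because each reading carries its own weight, adding a future clock to such an ensemble would mean appending one more entry rather than rewriting the whole scheme.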

Vladislav Gerginov works on one such clock called NIST-F4 at NIST’s Colorado campus.

Jason Koxvold

Last year Ye and his team demonstrated the viability of a nuclear clock based on thorium. This type of clock uses a nuclear transition—a shift in the quantum state of atomic nuclei—rather than an electron transition. Because nuclei are less sensitive to external interference than electrons are, nuclear clocks may become even more accurate than optical clocks once the technology is refined.

If the second doesn’t get redefined in 2030, scientists can try again in 2034 and 2038 at the next two meetings of the General Conference on Weights and Measures. A new definition won’t change much, if anything, for most people, but it will eventually and inevitably lead to technological advances. Researchers are already dreaming up applications such as quantum communication networks or upgraded GPS satellites that could pinpoint any location on Earth to within a centimeter. Other uses are just starting to be envisioned.

By pushing clocks forward, scientists may do more than redefine time—they might redefine our understanding of the universe. Supersensitive clocks that can detect minute changes in the passing of time—as shown in the time-dilation experiment—could be used to detect gravitational waves that pass through Earth as a consequence of massive cataclysms in space. By mapping the gravitational distortion of spacetime more precisely than ever, such clocks could also be used to study dark matter—the missing mass thought to be ubiquitous in the cosmos—as well as how gravity interacts with quantum theory.

Such endeavors could even rewrite our understanding of time itself—which has always been a more complicated notion in physics than in practical life. “The underlying classical laws say that there is no intrinsic difference between the past and future nor any intrinsic direction of determination from past to future,” says Jenann Ismael, a philosopher of science at Johns Hopkins University.

In any case, now that we have clocks that outstrip the literal definition of the second, many scientists say the way forward is obvious: we should improve the definition of time simply because we can. “As with any new idea in science, even if it is not exactly clear who needs a better measurement, when a better measurement is available, then you find the application,” says Patrizia Tavella, director of the time department at BIPM, the organization that defines the International System of Units. “We can do better,” she says of the current second. “Let’s do better.”